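Slurm is an open-source, fault-tolerant, and highly scalable cluster management and job scheduling system for Linux clusters of all sizes. Slurm requires no kernel modifications for its operation and is relatively self-contained. As a cluster workload manager, Slurm provides the following:

1. It allocates exclusive and/or non-exclusive access to resources (compute nodes) to users for a period of time so that they can perform work;

2. It provides a framework for starting, executing, and monitoring work (normally a parallel job) on the set of allocated nodes;

3. It arbitrates contention for resources by managing a queue of pending work;

4. It provides tools for job accounting statistics, job state diagnostics, and more.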

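System: CentOS 7 minimal installation, with software packages and the kernel updated, and SELinux and the firewall disabled.

Dedicated Slurm account (slurm): the account must have the same UID on the Master and on every Node; UID 200 is recommended.

If the Slurm Master needs to support the GUI command (sview), a graphical environment (Server with GUI) must be installed.

0. Install the EPEL repository: yum install -y epel-release && yum makecache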
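The prerequisite above does not spell out how to disable SELinux and the firewall. A minimal sketch, assuming the stock CentOS 7 layout where the persistent SELinux mode lives in /etc/selinux/config and the firewall is firewalld; the helper name `set_selinux_disabled` is hypothetical. It only rewrites the `SELINUX=` line of the file it is given, so it can be tried on a copy first.

```shell
# Hypothetical helper: force SELINUX=disabled in an SELinux config file
# (normally /etc/selinux/config on CentOS 7; the change applies after reboot).
set_selinux_disabled() {
    conf="$1"
    if grep -q '^SELINUX=' "$conf"; then
        # Rewrite the active SELINUX= line; SELINUXTYPE= does not match "^SELINUX=".
        sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
    else
        # No active line yet: append one.
        echo 'SELINUX=disabled' >> "$conf"
    fi
}
```

On the real host, as root: `set_selinux_disabled /etc/selinux/config && setenforce 0` (switches to permissive mode until the reboot), then `systemctl stop firewalld && systemctl disable firewalld`.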

[root@localhost ~]# yum install -y epel-release && yum makecache Loaded plugins: fastestmirror Loading mirror speeds from cached hostfile * base: mirrors.aliyun.com * extras: mirrors.aliyun.com * updates: mirrors.aliyun.com base | 3.6 kB 00:00:00 epel | 4.7 kB 00:00:00 extras | 2.9 kB 00:00:00 updates | 2.9 kB 00:00:00 (1/3): epel/x86_64/updateinfo | 1.0 MB 00:00:00 (2/3): updates/7/x86_64/primary_db | 4.5 MB 00:00:01 (3/3): epel/x86_64/primary_db | 6.9 MB 00:00:02 ...... (output omitted) ...... (5/9): updates/7/x86_64/other_db | 318 kB 00:00:00 (6/9): updates/7/x86_64/filelists_db | 2.4 MB 00:00:01 (7/9): base/7/x86_64/filelists_db | 7.1 MB 00:00:03 (8/9): epel/x86_64/other_db | 3.3 MB 00:00:04 (9/9): epel/x86_64/filelists_db | 12 MB 00:00:04 Metadata Cache Created

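1. Install the GUI package group required by the GUI command (sview), then reboot: yum groups install -y "Server with GUI" && reboot # Installing the package group is enough; there is no need to change the default boot target.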

[root@localhost ~]# yum groups install -y "Server with GUI" && reboot Loaded plugins: fastestmirror There is no installed groups file. Maybe run: yum groups mark convert (see man yum) Loading mirror speeds from cached hostfile * base: mirrors.aliyun.com * extras: mirrors.aliyun.com * updates: mirrors.aliyun.com Warning: Group core does not have any packages to install. Resolving Dependencies --> Running transaction check ---> Package ModemManager.x86_64 0:1.6.10-3.el7_6 will be installed --> Processing Dependency: ModemManager-glib(x86-64) = 1.6.10-3.el7_6 for package: ModemManager-1.6.10-3.el7_6.x86_64 --> Processing Dependency: libqmi-utils for package: ModemManager-1.6.10-3.el7_6.x86_64 --> Processing Dependency: libmbim-utils for package: ModemManager-1.6.10-3.el7_6.x86_64 ...... (output omitted) ...... xorg-x11-font-utils.x86_64 1:7.5-21.el7 xorg-x11-fonts-Type1.noarch 0:7.5-9.el7 xorg-x11-proto-devel.noarch 0:2018.4-1.el7 xorg-x11-server-common.x86_64 0:1.20.4-10.el7 xorg-x11-server-utils.x86_64 0:7.7-20.el7 xorg-x11-xkb-utils.x86_64 0:7.7-14.el7 yajl.x86_64 0:2.0.4-4.el7 yelp-libs.x86_64 2:3.28.1-1.el7 yelp-xsl.noarch 0:3.28.0-1.el7 zenity.x86_64 0:3.28.1-1.el7 Complete!

2. Set the hostname: hostnamectl set-hostname slurm-master # takes effect once you reconnect

[root@localhost ~]# hostnamectl set-hostname slurm-master
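Without an internal DNS service, each machine also has to resolve the others' hostnames through its local hosts file (the case the ControlAddr and NodeAddr notes further down cater for). A minimal, idempotent sketch for appending hosts-file entries, assuming the addresses used later in this walkthrough (192.168.80.250 for slurm-master, 192.168.80.251 for slurm-node1); `add_host_entry` is a hypothetical helper name.

```shell
# Hypothetical helper: append "IP<TAB>NAME" to a hosts-format file unless NAME
# already appears as a hostname on a non-comment line (safe to re-run).
add_host_entry() {
    hosts="$1"; ip="$2"; name="$3"
    if awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }
    ' "$hosts"; then
        return 0
    fi
    printf '%s\t%s\n' "$ip" "$name" >> "$hosts"
}
```

On every machine, as root: `add_host_entry /etc/hosts 192.168.80.250 slurm-master` and `add_host_entry /etc/hosts 192.168.80.251 slurm-node1`.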

3. Configure the time service and synchronize the clock: CentOS 7 uses the Chrony time service by default

[root@slurm-master ~]# systemctl status chronyd.service

● chronyd.service - NTP client/server

Loaded: loaded (/usr/lib/systemd/system/chronyd.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2020-09-25 12:54:49 CST; 8min ago

Docs: man:chronyd(8)

man:chrony.conf(5)

Process: 1048 ExecStartPost=/usr/libexec/chrony-helper update-daemon (code=exited, status=0/SUCCESS)

Process: 1003 ExecStart=/usr/sbin/chronyd $OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 1013 (chronyd)

Tasks: 1

CGroup: /system.slice/chronyd.service

└─1013 /usr/sbin/chronyd

Sep 25 12:54:49 localhost.localdomain systemd[1]: Starting NTP client/server...

Sep 25 12:54:49 localhost.localdomain chronyd[1013]: chronyd version 3.4 starting (+CMDMON +NTP +REFCLOCK +RTC +PRIVDROP +SCFILTER +SIGND +ASYN... +DEBUG)

Sep 25 12:54:49 localhost.localdomain chronyd[1013]: Frequency -7.864 +/- 1.309 ppm read from /var/lib/chrony/drift

Sep 25 12:54:49 localhost.localdomain systemd[1]: Started NTP client/server.

Sep 25 12:54:55 localhost.localdomain chronyd[1013]: Selected source 119.28.206.193

Hint: Some lines were ellipsized, use -l to show in full.

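a1) In /etc/chrony.conf, comment out or delete the four default lines "server 0.centos.pool.ntp.org iburst" through "server 3.centos.pool.ntp.org iburst"

a2) Add "server ntp.aliyun.com iburst" (Alibaba Cloud's official NTP server) or another NTP server of your choice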

    # These servers were defined in the installation:
    #server 0.centos.pool.ntp.org iburst
    #server 1.centos.pool.ntp.org iburst
    #server 2.centos.pool.ntp.org iburst
    #server 3.centos.pool.ntp.org iburst
    server ntp.aliyun.com iburst

a3) Uncomment "#allow 192.168.0.0/16" and change it to the IP range of your Slurm nodes

    # Allow NTP client access from local network.
    allow 192.168.80.0/24
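Steps a1 to a3 can also be applied non-interactively. A sketch using GNU sed and awk, assuming the stock CentOS 7 /etc/chrony.conf in which the pool servers appear as `server N.centos.pool.ntp.org iburst` and the sample `#allow 192.168.0.0/16` line is present; `configure_chrony` is a hypothetical helper, and the subnet is whatever range your Slurm nodes use.

```shell
# Hypothetical helper: apply steps a1-a3 to a chrony.conf-style file.
#   $1 = config file, $2 = NTP server to add, $3 = client subnet to allow
# Safe to run more than once: already-applied edits are left alone.
configure_chrony() {
    conf="$1"; server="$2"; subnet="$3"
    # a1) comment out the default "server N.centos.pool.ntp.org ..." lines
    sed -i 's/^server [0-9]\.centos\.pool\.ntp\.org /#&/' "$conf"
    # a2) append "server <server> iburst" unless an active line for it exists
    awk -v s="$server" '$1 == "server" && $2 == s { f = 1 } END { exit !f }' "$conf" \
        || echo "server $server iburst" >> "$conf"
    # a3) turn the stock sample "#allow 192.168.0.0/16" into the real subnet,
    #     or append an allow line if the sample is missing
    sed -i "s|^#allow 192\.168\.0\.0/16\$|allow $subnet|" "$conf"
    awk -v s="$subnet" '$1 == "allow" && $2 == s { f = 1 } END { exit !f }' "$conf" \
        || echo "allow $subnet" >> "$conf"
}
```

On the Master, as root: `configure_chrony /etc/chrony.conf ntp.aliyun.com 192.168.80.0/24`, then continue with the restart in a4.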

a4) Restart the Chrony service (systemctl restart chronyd.service) and verify (chronyc sources)

    [root@slurm-master ~]# systemctl restart chronyd.service
    [root@slurm-master ~]# chronyc sources
    210 Number of sources = 1
    MS Name/IP address Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^* 203.107.6.88 2 6 17 9 -288us[-1252us] +/- 16ms
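In `chronyc sources` output, the `^*` prefix marks a server source that chronyd is currently synchronized to, as in the line above. A small sketch that turns this into a yes/no check usable in scripts; `has_selected_source` is a hypothetical name, and it simply reads the command's output from standard input.

```shell
# Hypothetical helper: succeed if `chronyc sources` output read from stdin has a
# line whose MS column is "^*" (a server source chronyd is currently synced to).
has_selected_source() {
    awk '$1 == "^*" { f = 1 } END { exit !f }'
}
```

For example: `chronyc sources | has_selected_source && echo synchronized`.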

4. Deploy Munge: the version currently available for online installation (from EPEL) is 0.5.11

[root@slurm-master ~]# yum install -y munge munge-libs munge-devel Loaded plugins: fastestmirror, langpacks Loading mirror speeds from cached hostfile * base: mirrors.aliyun.com * extras: mirrors.aliyun.com * updates: mirrors.aliyun.com Resolving Dependencies --> Running transaction check ---> Package munge.x86_64 0:0.5.11-3.el7 will be installed ---> Package munge-devel.x86_64 0:0.5.11-3.el7 will be installed ---> Package munge-libs.x86_64 0:0.5.11-3.el7 will be installed --> Finished Dependency Resolution Dependencies Resolved ========================================================================================================================================================== Package Arch Version Repository Size ========================================================================================================================================================== Installing: munge x86_64 0.5.11-3.el7 epel 95 k munge-devel x86_64 0.5.11-3.el7 epel 22 k munge-libs x86_64 0.5.11-3.el7 epel 37 k Transaction Summary ========================================================================================================================================================== Install 3 Packages Total download size: 154 k Installed size: 341 k Downloading packages: (1/3): munge-devel-0.5.11-3.el7.x86_64.rpm | 22 kB 00:00:00 (2/3): munge-0.5.11-3.el7.x86_64.rpm | 95 kB 00:00:00 (3/3): munge-libs-0.5.11-3.el7.x86_64.rpm | 37 kB 00:00:00 ---------------------------------------------------------------------------------------------------------------------------------------------------------- Total 529 kB/s | 154 kB 00:00:00 Running transaction check Running transaction test Transaction test succeeded Running transaction Installing : munge-libs-0.5.11-3.el7.x86_64 1/3 Installing : munge-0.5.11-3.el7.x86_64 2/3 Installing : munge-devel-0.5.11-3.el7.x86_64 3/3 Verifying : munge-0.5.11-3.el7.x86_64 1/3 Verifying : munge-devel-0.5.11-3.el7.x86_64 2/3 Verifying : munge-libs-0.5.11-3.el7.x86_64 3/3 Installed: munge.x86_64 0:0.5.11-3.el7 munge-devel.x86_64 0:0.5.11-3.el7 munge-libs.x86_64 0:0.5.11-3.el7 Complete!

[root@slurm-master ~]# chmod -R 0700 /etc/munge /var/log/munge && chmod -R 0711 /var/lib/munge && chmod -R 0755 /var/run/munge

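c1) Generate the Munge key file using either of the two methods below; the file is stored in /etc/munge. Every Slurm node added later must receive a copy of this same file.

Wait for some random data (recommended for the paranoid): dd if=/dev/random bs=1 count=1024 >/etc/munge/munge.key

Grab some pseudorandom data (recommended for the impatient): dd if=/dev/urandom bs=1 count=1024 >/etc/munge/munge.key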

    [root@slurm-master ~]# dd if=/dev/urandom bs=1 count=1024 >/etc/munge/munge.key
    1024+0 records in
    1024+0 records out
    1024 bytes (1.0 kB) copied, 0.00257561 s, 398 kB/s
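Steps c1 and the `chmod` half of c2 can be combined so the key is never readable by other users, even briefly. A sketch assuming the same 1024-byte key from /dev/urandom as above; the function name `make_munge_key` is hypothetical, and the `chown munge:munge` from c2 is still required on the real file (it needs root, so it is left out here).

```shell
# Hypothetical helper: write a 1024-byte random key to $1 with mode 0600.
# The umask is changed only inside a subshell, so the caller's umask is kept;
# the explicit chmod also covers the case where the file already existed.
make_munge_key() {
    (
        umask 077
        dd if=/dev/urandom of="$1" bs=1 count=1024 2>/dev/null
    )
    chmod 0600 "$1"
}
```

On the Master: `make_munge_key /etc/munge/munge.key && chown munge:munge /etc/munge/munge.key`. When copying the key to a node later, `scp -p` preserves the file mode but not the owner, so the `chown` has to be repeated there.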

c2) Set the ownership and permissions of the Munge key file: chown munge:munge /etc/munge/munge.key && chmod 0600 /etc/munge/munge.key

chown munge:munge /etc/munge/munge.key && chmod 0600 /etc/munge/munge.key

c3) Start the Munge service and enable it at boot: systemctl start munge.service && systemctl enable munge.service

    [root@slurm-master ~]# systemctl start munge.service && systemctl enable munge.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/munge.service to /usr/lib/systemd/system/munge.service.

c4) Verify locally: munge -n | unmunge

    [root@slurm-master ~]# munge -n | unmunge
    STATUS: Success (0)
    ENCODE_HOST: slurm-master (192.168.80.250)
    ENCODE_TIME: 2020-09-25 13:29:06 +0800 (1601011746)
    DECODE_TIME: 2020-09-25 13:29:06 +0800 (1601011746)
    TTL: 300
    CIPHER: aes128 (4)
    MAC: sha1 (3)
    ZIP: none (0)
    UID: root (0)
    GID: root (0)
    LENGTH: 0
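Once the key has been copied to a node, the same check can be run across machines by encoding locally and decoding remotely, e.g. `munge -n | ssh slurm-node1 unmunge`, a pattern the MUNGE documentation suggests (slurm-node1 is the node name used later in this walkthrough). A sketch that extracts one field from `unmunge` output so the result can be tested in scripts; `unmunge_field` is a hypothetical helper.

```shell
# Hypothetical helper: print the value of one "NAME: value" field from
# unmunge output read on stdin (padding after the colon is stripped).
unmunge_field() {
    awk -v k="$1:" '$1 == k { sub(/^[^:]*:[ \t]*/, ""); print; exit }'
}
```

For example, `munge -n | ssh slurm-node1 unmunge | unmunge_field STATUS` is expected to print `Success (0)` when both machines share the same key.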

5. Install the required components: yum install -y rpm-build bzip2-devel openssl openssl-devel zlib-devel perl-DBI perl-ExtUtils-MakeMaker pam-devel readline-devel mariadb-devel python3 gtk2 gtk2-devel

[root@slurm-master ~]# yum install -y rpm-build bzip2-devel openssl openssl-devel zlib-devel perl-DBI perl-ExtUtils-MakeMaker pam-devel readline-devel mariadb-devel python3 gtk2 gtk2-devel Loaded plugins: fastestmirror, langpacks Loading mirror speeds from cached hostfile * base: mirrors.aliyun.com * extras: mirrors.aliyun.com * updates: mirrors.aliyun.com Package rpm-build-4.11.3-43.el7.x86_64 already installed and latest version Package 1:openssl-1.0.2k-19.el7.x86_64 already installed and latest version Package perl-DBI-1.627-4.el7.x86_64 already installed and latest version Package gtk2-2.24.31-1.el7.x86_64 already installed and latest version Resolving Dependencies --> Running transaction check ---> Package bzip2-devel.x86_64 0:1.0.6-13.el7 will be installed ---> Package gtk2-devel.x86_64 0:2.24.31-1.el7 will be installed --> Processing Dependency: pango-devel >= 1.20.0-1 for package: gtk2-devel-2.24.31-1.el7.x86_64 --> Processing Dependency: glib2-devel >= 2.28.0-1 for package: gtk2-devel-2.24.31-1.el7.x86_64 --> Processing Dependency: cairo-devel >= 1.6.0-1 for package: gtk2-devel-2.24.31-1.el7.x86_64 --> Processing Dependency: atk-devel >= 1.29.4-2 for package: gtk2-devel-2.24.31-1.el7.x86_64 --> Processing Dependency: pkgconfig(pangoft2) for package: gtk2-devel-2.24.31-1.el7.x86_64 ...... (output omitted) ...... mesa-khr-devel.x86_64 0:18.3.4-7.el7_8.1 mesa-libEGL-devel.x86_64 0:18.3.4-7.el7_8.1 mesa-libGL-devel.x86_64 0:18.3.4-7.el7_8.1 ncurses-devel.x86_64 0:5.9-14.20130511.el7_4 pango-devel.x86_64 0:1.42.4-4.el7_7 pcre-devel.x86_64 0:8.32-17.el7 perl-ExtUtils-Install.noarch 0:1.58-295.el7 perl-ExtUtils-Manifest.noarch 0:1.61-244.el7 perl-ExtUtils-ParseXS.noarch 1:3.18-3.el7 perl-devel.x86_64 4:5.16.3-295.el7 pixman-devel.x86_64 0:0.34.0-1.el7 pyparsing.noarch 0:1.5.6-9.el7 python3-libs.x86_64 0:3.6.8-13.el7 python3-pip.noarch 0:9.0.3-7.el7_7 python3-setuptools.noarch 0:39.2.0-10.el7 systemtap-sdt-devel.x86_64 0:4.0-11.el7 Complete!

6. Deploy Slurm

[root@slurm-master ~]# groupadd -g 200 slurm && useradd -u 200 -g 200 -s /sbin/nologin -M slurm
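Because the slurm account must carry the same UID (and GID) on the Master and on every node, it is worth checking after creating it. A sketch that reads a passwd-format file; `uid_of` is a hypothetical helper, and the cross-node comparison is shown only as usage.

```shell
# Hypothetical helper: print "UID:GID" of user $1 from a passwd-format file
# ($2, defaulting to /etc/passwd); prints nothing if the user is absent.
uid_of() {
    awk -F: -v u="$1" '$1 == u { print $3 ":" $4; exit }' "${2:-/etc/passwd}"
}
```

For example, `uid_of slurm` should print `200:200` here, and `[ "$(uid_of slurm)" = "$(ssh slurm-node1 getent passwd slurm | cut -d: -f3,4)" ]` compares it with a node.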

[root@slurm-master ~]# cd && wget https://download.schedmd.com/slurm/slurm-20.02.5.tar.bz2 --2020-09-25 13:37:50-- https://download.schedmd.com/slurm/slurm-20.02.5.tar.bz2 Resolving download.schedmd.com (download.schedmd.com)... 71.19.154.210 Connecting to download.schedmd.com (download.schedmd.com)|71.19.154.210|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 6325393 (6.0M) [application/x-bzip2] Saving to: ‘slurm-20.02.5.tar.bz2’ 100%[================================================================================================================>] 6,325,393 12.1KB/s in 8m 48s 2020-09-25 13:46:42 (11.7 KB/s) - ‘slurm-20.02.5.tar.bz2’ saved [6325393/6325393]

[root@slurm-master ~]# rpmbuild -ta --clean slurm-20.02.5.tar.bz2 Executing(%prep): /bin/sh -e /var/tmp/rpm-tmp.yM0uEb + umask 022 + cd /root/rpmbuild/BUILD + cd /root/rpmbuild/BUILD + rm -rf slurm-20.02.5 + /usr/bin/bzip2 -dc /root/slurm-20.02.5.tar.bz2 + /usr/bin/tar -xvvf - drwxr-xr-x 1000/1000 0 2020-09-11 04:56 slurm-20.02.5/ -rw-r--r-- 1000/1000 8543 2020-09-11 04:56 slurm-20.02.5/LICENSE.OpenSSL drwxr-xr-x 1000/1000 0 2020-09-11 04:56 slurm-20.02.5/auxdir/ -rw-r--r-- 1000/1000 306678 2020-09-11 04:56 slurm-20.02.5/auxdir/libtool.m4 -rw-r--r-- 1000/1000 5860 2020-09-11 04:56 slurm-20.02.5/auxdir/ax_gcc_builtin.m4 -rwxr-xr-x 1000/1000 15368 2020-09-11 04:56 slurm-20.02.5/auxdir/install-sh -rw-r--r-- 1000/1000 327116 2020-09-11 04:56 slurm-20.02.5/auxdir/ltmain.sh -rw-r--r-- 1000/1000 2630 2020-09-11 04:56 slurm-20.02.5/auxdir/x_ac_freeipmi.m4 -rw-r--r-- 1000/1000 1783 2020-09-11 04:56 slurm-20.02.5/auxdir/x_ac_yaml.m4 -rw-r--r-- 1000/1000 2709 2020-09-11 04:56 slurm-20.02.5/auxdir/x_ac_databases.m4 -rw-r--r-- 1000/1000 2018 2020-09-11 04:56 slurm-20.02.5/auxdir/x_ac_http_parser.m4 -rwxr-xr-x 1000/1000 36136 2020-09-11 04:56 slurm-20.02.5/auxdir/config.sub -rwxr-xr-x 1000/1000 23568 2020-09-11 04:56 slurm-20.02.5/auxdir/depcomp ...... (output omitted) ......
Checking for unpackaged file(s): /usr/lib/rpm/check-files /root/rpmbuild/BUILDROOT/slurm-20.02.5-1.el7.x86_64 Wrote: /root/rpmbuild/SRPMS/slurm-20.02.5-1.el7.src.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-perlapi-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-devel-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-example-configs-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-slurmctld-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-slurmd-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-slurmdbd-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-libpmi-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-torque-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-openlava-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-contribs-20.02.5-1.el7.x86_64.rpm Wrote: /root/rpmbuild/RPMS/x86_64/slurm-pam_slurm-20.02.5-1.el7.x86_64.rpm Executing(%clean): /bin/sh -e /var/tmp/rpm-tmp.ojihVc + umask 022 + cd /root/rpmbuild/BUILD + cd slurm-20.02.5 + rm -rf /root/rpmbuild/BUILDROOT/slurm-20.02.5-1.el7.x86_64 + exit 0 Executing(--clean): /bin/sh -e /var/tmp/rpm-tmp.lzdQo2 + umask 022 + cd /root/rpmbuild/BUILD + rm -rf slurm-20.02.5 + exit 0

[root@slurm-master ~]# cd /root/rpmbuild/RPMS/x86_64 && yum install -y slurm-*.rpm Loaded plugins: fastestmirror, langpacks Examining slurm-20.02.5-1.el7.x86_64.rpm: slurm-20.02.5-1.el7.x86_64 Marking slurm-20.02.5-1.el7.x86_64.rpm to be installed Examining slurm-contribs-20.02.5-1.el7.x86_64.rpm: slurm-contribs-20.02.5-1.el7.x86_64 Marking slurm-contribs-20.02.5-1.el7.x86_64.rpm to be installed Examining slurm-devel-20.02.5-1.el7.x86_64.rpm: slurm-devel-20.02.5-1.el7.x86_64 Marking slurm-devel-20.02.5-1.el7.x86_64.rpm to be installed Examining slurm-example-configs-20.02.5-1.el7.x86_64.rpm: slurm-example-configs-20.02.5-1.el7.x86_64 ...... (output omitted) ...... Verifying : slurm-libpmi-20.02.5-1.el7.x86_64 12/13 Verifying : slurm-perlapi-20.02.5-1.el7.x86_64 13/13 Installed: slurm.x86_64 0:20.02.5-1.el7 slurm-contribs.x86_64 0:20.02.5-1.el7 slurm-devel.x86_64 0:20.02.5-1.el7 slurm-example-configs.x86_64 0:20.02.5-1.el7 slurm-libpmi.x86_64 0:20.02.5-1.el7 slurm-openlava.x86_64 0:20.02.5-1.el7 slurm-pam_slurm.x86_64 0:20.02.5-1.el7 slurm-perlapi.x86_64 0:20.02.5-1.el7 slurm-slurmctld.x86_64 0:20.02.5-1.el7 slurm-slurmd.x86_64 0:20.02.5-1.el7 slurm-slurmdbd.x86_64 0:20.02.5-1.el7 slurm-torque.x86_64 0:20.02.5-1.el7 Dependency Installed: perl-Switch.noarch 0:2.16-7.el7 Complete!

e1) Copy the configuration template: cd /etc/slurm/ && cp slurm.conf.example slurm.conf

[root@slurm-master x86_64]# cd /etc/slurm/ && cp slurm.conf.example slurm.conf

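e2) Edit the configuration file: vim slurm.conf

ClusterName=slurm-cluster # the name of the Slurm cluster

ControlMachine=slurm-master # the hostname of the Slurm Master

ControlAddr=192.168.80.250 # the IP address of the Slurm Master (if an internal DNS service or the local hosts file resolves the Slurm Master's hostname, this can stay at its default; without DNS it must be set to the Master's actual IP address; setting it is recommended either way)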

    # See the slurm.conf man page for more information.
    #
    ClusterName=slurm-cluster
    ControlMachine=slurm-master
    ControlAddr=192.168.80.250
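Instead of editing slurm.conf by hand, simple single-key settings like the three above can be applied from a script. A sketch assuming plain `Key=Value` lines as in the example file; `set_conf_key` is a hypothetical helper that replaces the first active (uncommented) line for the key, or appends one if none exists.

```shell
# Hypothetical helper: set Key=Value in a slurm.conf-style file.
# Replaces the first line that starts with "Key=" (case-sensitive; commented
# lines such as "#Key=" are left alone) or appends the setting if none exists.
# Writing back with "cat >" keeps the file's owner and permissions.
set_conf_key() {
    sck_file="$1"; sck_key="$2"; sck_val="$3"
    sck_tmp=$(mktemp) || return 1
    awk -v k="$sck_key" -v v="$sck_val" '
        !done && index($0, k "=") == 1 { print k "=" v; done = 1; next }
        { print }
        END { if (!done) print k "=" v }
    ' "$sck_file" > "$sck_tmp" && cat "$sck_tmp" > "$sck_file"
    rm -f "$sck_tmp"
}
```

For example: `set_conf_key /etc/slurm/slurm.conf ControlAddr 192.168.80.250`. It does not handle lines that carry several keys, such as NodeName or PartitionName entries.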

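ReturnToService=2 # prevents nodes from staying DOWN after an unexpected reboot; the default is "0"; "2" is recommended, so that a rebooted node automatically returns to the idle state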

    #FirstJobId=
    ReturnToService=2
    #MaxJobCount=

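NodeName=linux[1-32] Procs=1 State=UNKNOWN # default entry; it is recommended to comment it out (keeping it for reference) and add a new line describing your Slurm compute nodes (the Slurm Master controller itself does not need an entry)

Notes:

NodeName=slurm-node[1-32]: # the node hostnames; naming formats: a single node "NodeName1", or a range (contiguous or not) "NodeName[2-3,4,6,7-10]"

NodeAddr=x.x.x.x: # the IPv4 address of the corresponding node; can be omitted when the cluster has a DNS service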

    #NodeName=linux[1-32] Procs=1 State=UNKNOWN
    NodeName=slurm-node1 NodeAddr=192.168.80.251 Procs=1 State=UNKNOWN
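To see exactly which hostnames a bracketed NodeName expression stands for, `scontrol show hostnames 'slurm-node[2-3,4,6,7-10]'` prints them one per line on a machine with Slurm installed. For illustration without Slurm, a simplified sketch of the same expansion, assuming a single `prefix[...]` group of plain decimal numbers and ranges (no zero-padding, no multiple bracket groups); `expand_hostlist` is a hypothetical helper and not how Slurm itself implements it.

```shell
# Hypothetical, simplified hostlist expansion, e.g. "node[2-3,6]" -> node2 node3 node6
# (one per line). Supports a single bracket group of plain decimal numbers and
# ranges only; zero-padded or multi-group expressions are out of scope.
expand_hostlist() {
    case "$1" in
        *[[]*]) ;;                      # has a "[...]" suffix: expand below
        *) echo "$1"; return 0 ;;       # plain hostname: print as-is
    esac
    eh_prefix=${1%%[[]*}                # text before the first "["
    eh_inner=${1#*[[]}                  # text after the first "["
    eh_inner=${eh_inner%]}              # drop the closing "]"
    echo "$eh_inner" | tr ',' '\n' | while IFS=- read -r lo hi; do
        [ -n "$hi" ] || hi=$lo          # single number: a range of one
        i=$lo
        while [ "$i" -le "$hi" ]; do
            echo "$eh_prefix$i"
            i=$((i + 1))
        done
    done
}
```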

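PartitionName=debug Nodes=ALL Default=YES MaxTime=INFINITE State=UP # default entry; it is recommended to comment it out (keeping it for reference) and add a new line describing your partition (queue) (the Slurm Master controller itself does not need to be included)

Notes:

PartitionName=debug: # the partition (queue) name; default "debug"; can be customized

Nodes=ALL: # the compute nodes that belong to the partition; default "ALL"; can be customized using the same naming formats: a single node "NodeName1", or a range (contiguous or not) "NodeName[2-3,4,6,7-10]"

Default=YES: # marks the default partition, used when a job is submitted without specifying a partition; when there are several partitions, only one of them may be set to YES

MaxTime=INFINITE: # the maximum run time of a job (in minutes by default); the default "INFINITE" means no limit; keep the default

State=UP: # the partition state; the default "UP" means the partition is available; keep the default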

    #PartitionName=debug Nodes=ALL Default=YES MaxTime=INFINITE State=UP
    PartitionName=debug Nodes=slurm-node1 Default=YES MaxTime=INFINITE State=UP
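A mistyped node name in `Nodes=` (for example `slrum-node...` instead of `slurm-node...`) typically keeps slurmctld from starting. A rough sketch that flags such mismatches before the service is started; it compares the literal `Nodes=` values of active lines against the literal `NodeName=` values without expanding bracketed ranges, so it assumes both are written the same way. `undefined_partition_nodes` is a hypothetical helper.

```shell
# Hypothetical lint: print Nodes= values on active lines that do not literally
# match any NodeName= value ("ALL" is always accepted). Bracketed ranges are
# compared as plain strings, not expanded.
undefined_partition_nodes() {
    awk '
        /^[ \t]*#/ { next }                         # skip commented-out lines
        {
            for (i = 1; i <= NF; i++) {
                v = $i
                if (sub(/^NodeName=/, "", v)) defined[v] = 1
                else if (sub(/^Nodes=/, "", v)) wanted[++n] = v
            }
        }
        END {
            for (j = 1; j <= n; j++)
                if (wanted[j] != "ALL" && !(wanted[j] in defined))
                    print wanted[j]
        }
    ' "$1"
}
```

Running `undefined_partition_nodes /etc/slurm/slurm.conf` should print nothing for a consistent file.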

e3) Create the supporting directory and set its ownership: mkdir /var/spool/slurm && chown slurm:slurm /var/spool/slurm

[root@slurm-master slurm]# mkdir /var/spool/slurm && chown slurm:slurm /var/spool/slurm
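The directory created here has to match what slurm.conf actually points at; in particular, `StateSaveLocation` (where slurmctld saves its state) must be writable by the SlurmUser. A sketch for reading such values back out of the file so the `mkdir`/`chown` can be driven from it; `conf_value` is a hypothetical helper, and which keys your copy of the example file sets, and to which paths, is an assumption to check.

```shell
# Hypothetical helper: print the value from the last uncommented "Key=Value"
# line for key $2 in slurm.conf-style file $1 (only the first field of each
# line is examined, so multi-key lines such as NodeName=... are not searched).
conf_value() {
    awk -v k="$2" '
        /^[ \t]*#/ { next }
        index($1, k "=") == 1 { val = substr($1, length(k) + 2) }
        END { if (val != "") print val }
    ' "$1"
}
```

For example: `d=$(conf_value /etc/slurm/slurm.conf StateSaveLocation) && mkdir -p "$d" && chown slurm:slurm "$d"`.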

e4) Start the Slurm Master service and enable it at boot: systemctl start slurmctld.service && systemctl enable slurmctld.service

    [root@slurm-master slurm]# systemctl start slurmctld.service && systemctl enable slurmctld.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/slurmctld.service to /usr/lib/systemd/system/slurmctld.service.

[root@slurm-master slurm]# systemctl status slurmctld.service

● slurmctld.service - Slurm controller daemon

Loaded: loaded (/usr/lib/systemd/system/slurmctld.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-09-25 14:16:13 CST; 3s ago

Process: 73587 ExecStart=/usr/sbin/slurmctld $SLURMCTLD_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 73589 (slurmctld)

Tasks: 7

CGroup: /system.slice/slurmctld.service

└─73589 /usr/sbin/slurmctld

Sep 25 14:16:13 slurm-master systemd[1]: Starting Slurm controller daemon...

Sep 25 14:16:13 slurm-master systemd[1]: Can't open PID file /var/run/slurmctld.pid (yet?) after start: No such file or directory

Sep 25 14:16:13 slurm-master systemd[1]: Started Slurm controller daemon.

    [root@slurm-master slurm]# sinfo
    PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
    debug* up infinite 1 unk* slurm-node1
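In sinfo's STATE column, a trailing `*` means the node is not responding, which is presumably why slurm-node1 shows as `unk*` at this point: only the Master has been set up so far. A sketch that lists such nodes from `sinfo -h -o '%N %t'` output (`-h` suppresses the header, `%N` is the node list and `%t` the compact state); `nonresponding_nodes` is a hypothetical helper that reads that output on stdin.

```shell
# Hypothetical helper: read "NODELIST STATE" pairs (as printed by
# `sinfo -h -o '%N %t'`) on stdin and print node lists whose state ends in "*".
nonresponding_nodes() {
    awk '$2 ~ /\*$/ { print $1 }'
}
```

For example: `sinfo -h -o '%N %t' | nonresponding_nodes`.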

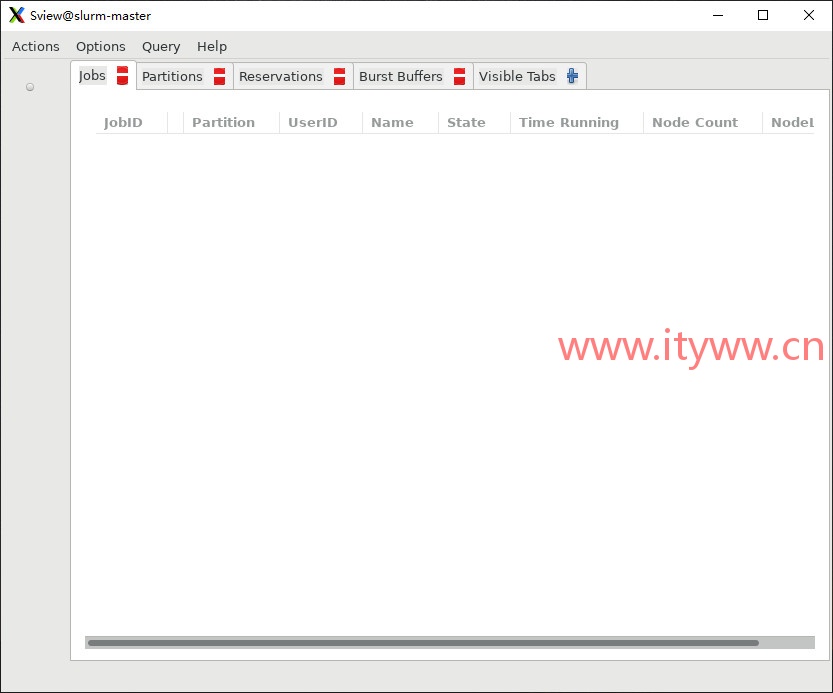

[root@slurm-master slurm]# sview