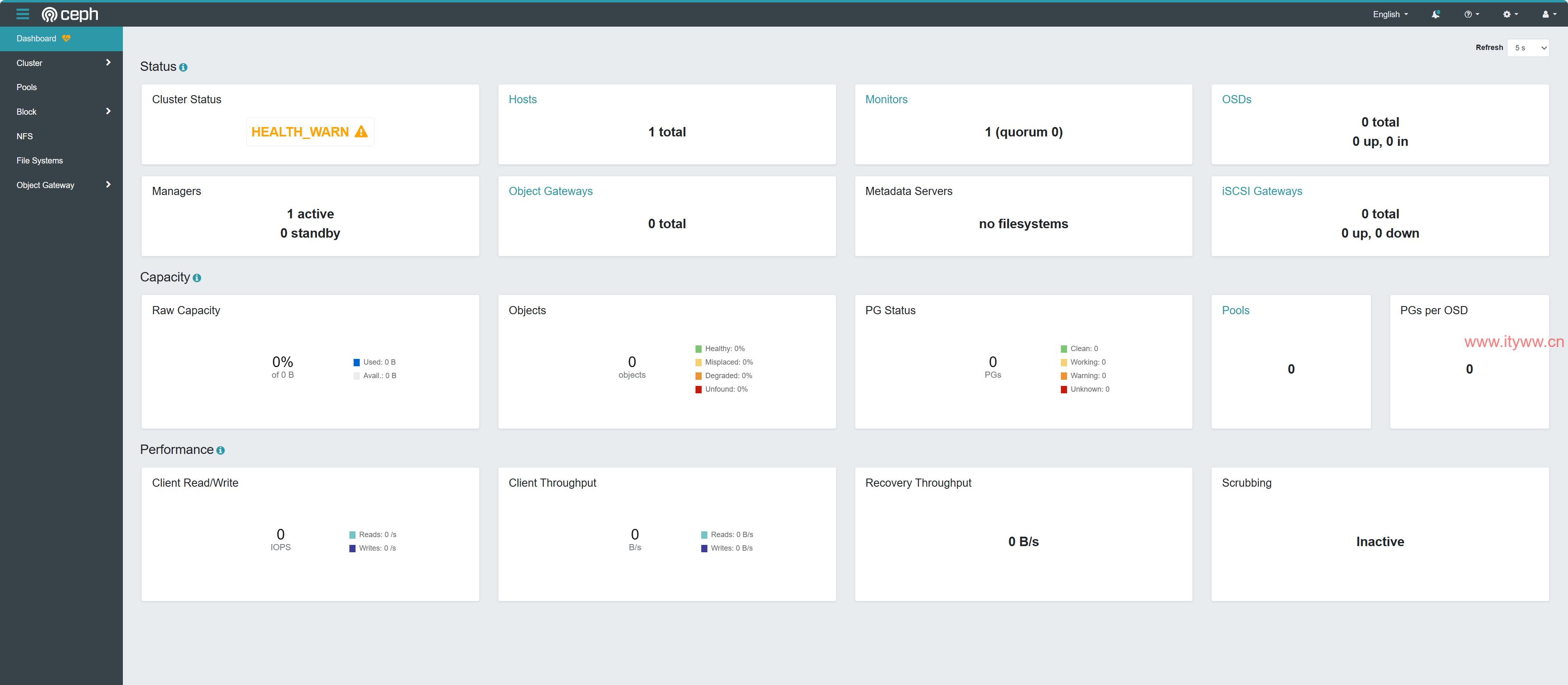

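For Ceph cluster deployment, refer to this article:

I. Overview: Ceph is an open-source distributed storage system that provides object, block, and file storage services in a single unified system. 1. Deployment version: this tutorial […]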

I. Overview

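A Ceph block device (Ceph RBD) is a thin-provisioned, resizable storage volume whose data is striped across multiple OSDs. It builds on RADOS capabilities such as snapshots, replication, and strong consistency. Clients talk to the OSDs through either the kernel module or the librbd library. Ceph block devices offer high performance and large-scale scalability to kernel-based virtual machines (KVM, for example via QEMU) and to cloud computing platforms such as OpenStack and CloudStack, which typically rely on libvirt and QEMU to integrate with Ceph block devices. Block-based interfaces are a mature and common way to store data on media such as HDDs, SSDs, CDs, floppy disks, and even tape, which makes the block device interface a natural fit for interacting with mass data storage such as Ceph. The Ceph RADOS Gateway, the Ceph File System, and Ceph block devices can all be operated in the same cluster at the same time.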

II. Initializing the RBD Storage Pool

1. Create the RBD pool

[root@hydrogen1 ~]# ceph osd pool create rbd 64 64    # create the pool

pool 'rbd' created

[root@hydrogen1 ~]# ceph osd pool ls    # list the pools

device_health_metrics

rbd
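The two 64s in the command above are the placement group counts (pg_num and pgp_num). Ceph raises a health warning when a pool's pg_num is not a power of two, so it can be worth checking the value before creating the pool. The following is a minimal sketch only: is_power_of_two and pool_create_cmd are hypothetical helpers, not part of Ceph, and the command is printed rather than executed.

```shell
#!/usr/bin/env bash

# Return success (0) if the argument is a positive power of two.
is_power_of_two() {
    local n=$1
    [[ $n =~ ^[0-9]+$ ]] || return 1
    n=$(( 10#$n ))                      # force base 10, so "064" is not read as octal
    (( n > 0 && (n & (n - 1)) == 0 ))
}

# Print (do not run) the pool creation command, using the same value for
# pg_num and pgp_num as in the example above.
# pool_create_cmd is a hypothetical helper for illustration only.
pool_create_cmd() {
    local pool=$1 pg_num=$2
    if ! is_power_of_two "$pg_num"; then
        echo "pg_num '$pg_num' is not a power of two" >&2
        return 1
    fi
    echo "ceph osd pool create $pool $pg_num $pg_num"
}

pool_create_cmd rbd 64
```

Printing the command instead of running it keeps the sketch safe to try on a machine without a Ceph cluster.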

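2. Associate the RBD pool with an application (think of the application as the pool's type). This tells Ceph that the pool named "rbd" is to be used by the RBD (RADOS Block Device) application, so that RBD clients can store and manage block devices in it.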

[root@hydrogen1 ~]# ceph osd pool application enable rbd rbd    # the first rbd is the pool name, the second is the application name

enabled application 'rbd' on pool 'rbd'

III. Basic RBD Commands

1. List block devices: rbd list PoolName    # the pool name can be omitted when the pool is named "rbd"

[root@hydrogen1 ~]# rbd list rbd

9e6c2f1eddc8

[root@hydrogen1 ~]# rbd list

9e6c2f1eddc8

2. Show a block device's size and other details: rbd info PoolName/RBDName    # the pool name can be omitted when the pool is named "rbd"

[root@hydrogen1 ~]# rbd info rbd/9e6c2f1eddc8

rbd image '9e6c2f1eddc8':

size 20 GiB in 5120 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: da4f6ef53937

block_name_prefix: rbd_data.da4f6ef53937

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Fri Sep 27 13:35:18 2024

access_timestamp: Fri Sep 27 13:35:18 2024

modify_timestamp: Fri Sep 27 13:35:18 2024

[root@hydrogen1 ~]# rbd info 9e6c2f1eddc8

rbd image '9e6c2f1eddc8':

size 20 GiB in 5120 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: da4f6ef53937

block_name_prefix: rbd_data.da4f6ef53937

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Fri Sep 27 13:35:18 2024

access_timestamp: Fri Sep 27 13:35:18 2024

modify_timestamp: Fri Sep 27 13:35:18 2024
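The size and order fields in the rbd info output above are linked by simple arithmetic: an order of 22 means each object is 2^22 bytes (4 MiB), so a 20 GiB image maps to 20 × 1024 / 4 = 5120 objects. The sketch below reproduces that calculation. The helper names are hypothetical, and because the image is thin-provisioned, the result is the maximum number of objects rather than the number actually allocated.

```shell
#!/usr/bin/env bash

# Object size in bytes for a given order (object size = 2^order bytes).
object_size_bytes() {
    echo $(( 1 << $1 ))
}

# Number of objects an image of SIZE_GIB GiB maps to at the given order,
# rounding up. Hypothetical helper for illustration only.
object_count() {
    local size_gib=$1 order=$2
    local size_bytes=$(( size_gib * 1024 * 1024 * 1024 ))
    local obj_bytes=$(( 1 << order ))
    echo $(( (size_bytes + obj_bytes - 1) / obj_bytes ))
}

object_size_bytes 22   # prints 4194304 (4 MiB)
object_count 20 22     # prints 5120
```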

3. Create a block device: rbd create PoolName/RBDName -s Size    # Size accepts the units M, G, and T; a bare number is interpreted as M

[root@hydrogen1 ~]# rbd create rbd/rbdtest -s 10G

[root@hydrogen1 ~]# rbd ls

9e6c2f1eddc8

rbdtest

[root@hydrogen1 ~]# rbd info rbdtest

rbd image 'rbdtest':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: dc1774207e60

block_name_prefix: rbd_data.dc1774207e60

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Fri Sep 27 16:38:45 2024

access_timestamp: Fri Sep 27 16:38:45 2024

modify_timestamp: Fri Sep 27 16:38:45 2024
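To make the unit rule for -s concrete, here is a small sketch that converts a size argument to MiB. It assumes only the three suffixes mentioned above (M, G, T) and treats a bare number as MiB. size_to_mib is a hypothetical helper, not an rbd subcommand.

```shell
#!/usr/bin/env bash

# Convert a size such as "10G", "512M", "1T" or "2048" to MiB.
# A bare number is treated as MiB, matching the default unit noted above.
size_to_mib() {
    local size=$1
    if [[ ! $size =~ ^([0-9]+)([MGT]?)$ ]]; then
        echo "unsupported size: $size" >&2
        return 1
    fi
    local value=$(( 10#${BASH_REMATCH[1]} ))   # force base 10
    local unit=${BASH_REMATCH[2]}
    case $unit in
        ""|M) echo "$value" ;;
        G)    echo $(( value * 1024 )) ;;
        T)    echo $(( value * 1024 * 1024 )) ;;
    esac
}

size_to_mib 10G    # prints 10240
size_to_mib 2048   # prints 2048
```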

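4. Resize a block device: rbd resize PoolName/RBDName -s Size    # Size accepts the units M, G, and T; a bare number is interpreted as M. The value is the new total size of the image, not an amount added to the current size.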

[root@hydrogen1 ~]# rbd resize rbd/rbdtest -s 20G

Resizing image: 100% complete...done.

[root@hydrogen1 ~]# rbd info rbdtest

rbd image 'rbdtest':

size 20 GiB in 5120 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: dc1774207e60

block_name_prefix: rbd_data.dc1774207e60

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Fri Sep 27 16:38:45 2024

access_timestamp: Fri Sep 27 16:38:45 2024

modify_timestamp: Fri Sep 27 16:38:45 2024
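Because -s sets the total size, the same command can also shrink an image, but rbd refuses to do so unless --allow-shrink is passed. The sketch below prints the appropriate command for a given current and target size in GiB. resize_cmd is a hypothetical helper, and it only prints the command rather than running it.

```shell
#!/usr/bin/env bash

# Print the rbd resize command for changing an image from current_gib
# to target_gib. Shrinking needs the extra --allow-shrink flag.
# resize_cmd is a hypothetical helper for illustration only.
resize_cmd() {
    local spec=$1 current_gib=$2 target_gib=$3
    if (( target_gib < current_gib )); then
        echo "rbd resize $spec -s ${target_gib}G --allow-shrink"
    else
        echo "rbd resize $spec -s ${target_gib}G"
    fi
}

resize_cmd rbd/rbdtest 10 20   # grow:   rbd resize rbd/rbdtest -s 20G
resize_cmd rbd/rbdtest 20 10   # shrink: rbd resize rbd/rbdtest -s 10G --allow-shrink
```

Resizing the image does not resize any filesystem inside it. That has to be grown or shrunk separately with the filesystem's own tools.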

5. Delete a block device: rbd remove PoolName/RBDName    # the pool name can be omitted when the pool is named "rbd"

[root@hydrogen1 ~]# rbd remove rbd/rbdtest

Removing image: 100% complete...done.

[root@hydrogen1 ~]# rbd ls

9e6c2f1eddc8

[root@hydrogen1 ~]# rbd remove rbdtest

Removing image: 100% complete...done.

[root@hydrogen1 ~]# rbd ls

9e6c2f1eddc8
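The commands in this section accept either PoolName/RBDName or a bare RBDName, with the bare form falling back to the pool named "rbd". Since a delete cannot be undone, a script may prefer to spell out the pool explicitly before removing anything. The sketch below shows one way to normalize an image name. image_spec and DEFAULT_POOL are hypothetical names, and the default of "rbd" is an assumption that matches this walkthrough.

```shell
#!/usr/bin/env bash

# Pool used when an image name carries no pool prefix (assumed to be "rbd",
# as in the examples above).
DEFAULT_POOL=rbd

# Expand a bare image name to PoolName/RBDName; leave full specs untouched.
# image_spec is a hypothetical helper for illustration only.
image_spec() {
    local name=$1
    case $name in
        */*) echo "$name" ;;
        *)   echo "$DEFAULT_POOL/$name" ;;
    esac
}

image_spec rbdtest          # prints rbd/rbdtest
image_spec mypool/rbdtest   # prints mypool/rbdtest
```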