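I. Overview

Ceph is an open-source distributed storage system that provides object, block, and file storage within a single unified system.

1. Release to deploy: at the time of writing, the active Ceph releases are Reef (18) and Quincy (17). The current release list is on the official site: https://docs.ceph.com/en/latest/releases/. Stability comes first for a storage system, and the active releases are still being iterated on, so this guide uses Pacific (16), the most recent stable release among the archived versions.

2. Operating system: Ceph Pacific currently supports only 8-series (Enterprise Linux 8) operating systems, so Rocky Linux 8.10 is recommended.

3. Cluster architecture: following the officially recommended deployment method, the cluster uses a standard three-server layout.

II. Environment Preparation (run every step below on all nodes)

1. Set the hostname on every Ceph node, for example ceph1, ceph2, ceph3 (the nodes in this guide are named hydrogen1, hydrogen2, and hydrogen3). The procedure is not covered here; configure it yourself.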

2. Add local name-resolution records for all nodes to the hosts file (/etc/hosts):

cat >> /etc/hosts <<EOF
172.16.100.131 hydrogen1
172.16.100.132 hydrogen2
172.16.100.133 hydrogen3
EOF
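
Because `cat >>` appends unconditionally, running the step twice leaves duplicate records in /etc/hosts. The following is a minimal sketch of an idempotent variant. The helper name `add_host_entry` is made up for illustration and is not part of any Ceph tooling; the addresses and hostnames are the ones used in this guide.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless an identical line is already present.
# (Illustrative helper; the name is an assumption, not a standard tool.)
add_host_entry() {
    file=$1; ip=$2; name=$3
    # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
    if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage on a real node (as root):
#   add_host_entry /etc/hosts 172.16.100.131 hydrogen1
#   add_host_entry /etc/hosts 172.16.100.132 hydrogen2
#   add_host_entry /etc/hosts 172.16.100.133 hydrogen3
```

Pointing the first argument at a scratch file is a safe way to try the helper before touching /etc/hosts.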

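3. Configure the system package mirror: see the Alibaba Cloud mirror instructions. Not covered here; configure it yourself.

4. Configure time synchronization (clocks that drift apart trigger Ceph cluster health warnings): see the Chrony time-synchronization service. Not covered here; configure it yourself.

5. Install Python 3.6: dnf install -y python36 # not covered in further detail here

6. Install Docker: see the Docker installation and deployment guide. Not covered here; configure it yourself.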


III. Deploy the Ceph Cluster

1. Install cephadm (run every step below on Ceph node 1)

a) Download the cephadm script: curl --silent --remote-name --location https://github.com/ceph/ceph/raw/pacific/src/cephadm/cephadm

[root@hydrogen1 ~]# curl --silent --remote-name --location https://github.com/ceph/ceph/raw/pacific/src/cephadm/cephadm
[root@hydrogen1 ~]# ll
total 368
-rw-------. 1 root root   1742 Sep 24 14:44 anaconda-ks.cfg
-rw-r--r--  1 root root 355729 Sep 26 11:28 cephadm
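
With `--silent` and no `--fail`, curl saves an HTTP error page under the name cephadm without complaining, and the problem only shows up when the file is executed. The sketch below adds `--fail` and `--show-error` and then checks that the download starts with a `#!` line. It assumes the Pacific cephadm file is a script with a shebang on its first line; the function names `looks_like_script` and `fetch_cephadm` are illustrative.

```shell
#!/bin/sh
# looks_like_script FILE
# Succeed only if FILE is non-empty and its first line starts with "#!".
looks_like_script() {
    [ -s "$1" ] || return 1
    head -n 1 "$1" | grep -q '^#!'
}

# fetch_cephadm
# Download the script into the current directory, failing on HTTP errors
# (--fail), then verify the result before it is made executable.
fetch_cephadm() {
    url=https://github.com/ceph/ceph/raw/pacific/src/cephadm/cephadm
    curl --fail --silent --show-error --remote-name --location "$url" || return 1
    looks_like_script cephadm
}

# Usage: fetch_cephadm && chmod +x cephadm
```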

b) Make the script executable: chmod +x cephadm

[root@hydrogen1 ~]# chmod +x cephadm
[root@hydrogen1 ~]# ll
total 368
-rw-------. 1 root root   1742 Sep 24 14:44 anaconda-ks.cfg
-rwxr-xr-x  1 root root 355729 Sep 26 11:28 cephadm

c) Add the Ceph package repository: ./cephadm add-repo --release pacific

[root@hydrogen1 ~]# ./cephadm add-repo --release pacific
Writing repo to /etc/yum.repos.d/ceph.repo...
Enabling EPEL...
Completed adding repo.

d) Back up the repository file: cp /etc/yum.repos.d/ceph.repo{,.bak}

[root@hydrogen1 ~]# cp /etc/yum.repos.d/ceph.repo{,.bak}

[root@hydrogen1 ~]# ll /etc/yum.repos.d/ceph.repo*

-rw-r--r-- 1 root root 477 Sep 26 11:31 /etc/yum.repos.d/ceph.repo

-rw-r--r-- 1 root root 477 Sep 26 11:34 /etc/yum.repos.d/ceph.repo.bak

e) Point the repository at the Alibaba Cloud mirror in China: sed -i 's#download.ceph.com#mirrors.aliyun.com/ceph#' /etc/yum.repos.d/ceph.repo

[root@hydrogen1 ~]# sed -i 's#download.ceph.com#mirrors.aliyun.com/ceph#' /etc/yum.repos.d/ceph.repo
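
The sed command prints nothing on success, so it is worth confirming that no reference to download.ceph.com survived. A small sketch, assuming GNU sed (as shipped with Rocky Linux); the wrapper name `switch_mirror` is made up for illustration, and the sed expression is the same one used above.

```shell
#!/bin/sh
# switch_mirror FILE
# Rewrite download.ceph.com to the Aliyun mirror in FILE, then fail if any
# download.ceph.com reference is still present.
switch_mirror() {
    sed -i 's#download.ceph.com#mirrors.aliyun.com/ceph#' "$1" || return 1
    ! grep -q 'download\.ceph\.com' "$1"
}

# Usage: switch_mirror /etc/yum.repos.d/ceph.repo && echo "mirror switched"
```

The expression has no `g` flag, so it rewrites only the first match on each line; the verification step would report a leftover if a line ever held two URLs.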

f) Install the cephadm command: ./cephadm install

[root@hydrogen1 ~]# ./cephadm install
Installing packages ['cephadm']...

g) Confirm that cephadm is now on the PATH

[root@hydrogen1 ~]# which cephadm
/usr/sbin/cephadm

2. Deploy the Ceph cluster (run every step below on Ceph node 1)

a) Bootstrap the cluster: cephadm bootstrap --mon-ip 172.16.100.131

[root@hydrogen1 ~]# cephadm bootstrap --mon-ip 172.16.100.131

Creating directory /etc/ceph for ceph.conf

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: fe840364-7bc6-11ef-98cd-e43d1a0a3210

Verifying IP 172.16.100.131 port 3300 ...

Verifying IP 172.16.100.131 port 6789 ...

Mon IP `172.16.100.131` is in CIDR network `172.16.100.0/24`

Mon IP `172.16.100.131` is in CIDR network `172.16.100.0/24`

Internal network (--cluster-network) has not been provided, OSD replication will default to the public_network

Pulling container image quay.io/ceph/ceph:v16...

Ceph version: ceph version 16.2.15 (618f440892089921c3e944a991122ddc44e60516) pacific (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting public_network to 172.16.100.0/24 in mon config section

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 9283 ...

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 5...

mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host hydrogen1...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 9...

mgr epoch 9 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

Ceph Dashboard is now available at:

URL: https://hydrogen1:8443/

User: admin

Password: 87uqmju6ia

Enabling client.admin keyring and conf on hosts with "admin" label

Enabling autotune for osd_memory_target

You can access the Ceph CLI as following in case of multi-cluster or non-default config:

sudo /usr/sbin/cephadm shell --fsid fe840364-7bc6-11ef-98cd-e43d1a0a3210 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Or, if you are only running a single cluster on this host:

sudo /usr/sbin/cephadm shell

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/en/pacific/mgr/telemetry/

Bootstrap complete.

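The bootstrap command above does the following:

1. Creates a monitor daemon and a manager daemon for the new cluster on the local host.

2. Generates a new SSH key for the Ceph cluster and adds it to the root user's authorized keys file (/root/.ssh/authorized_keys).

3. Writes a copy of the public key to /etc/ceph/ceph.pub.

4. Writes the minimal configuration needed to communicate with the new cluster to the Ceph configuration file (/etc/ceph/ceph.conf).

5. Writes a copy of the administrative (privileged) key to the client keyring file (/etc/ceph/ceph.client.admin.keyring).

6. Adds the admin label (_admin) to the bootstrap host.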
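
Steps 3 to 5 leave three files behind, which makes for a quick post-bootstrap check. The following sketch reports any that are missing; the function name `check_bootstrap_files` is illustrative, and the file names are the ones listed above.

```shell
#!/bin/sh
# check_bootstrap_files [DIR]
# Print the bootstrap files missing from DIR (default /etc/ceph).
# Returns 1 if any file is absent, 0 otherwise.
check_bootstrap_files() {
    dir=${1:-/etc/ceph}
    missing=0
    for f in ceph.conf ceph.client.admin.keyring ceph.pub; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f"
            missing=1
        fi
    done
    return "$missing"
}

# Usage: check_bootstrap_files && echo "bootstrap files present"
```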

b) List the generated configuration files

[root@hydrogen1 ~]# ll /etc/ceph/
total 12
-rw------- 1 root root 151 Sep 26 13:25 ceph.client.admin.keyring
-rw-r--r-- 1 root root 179 Sep 26 13:25 ceph.conf
-rw-r--r-- 1 root root 595 Sep 26 13:20 ceph.pub

c) List the pulled images and the running containers

[root@hydrogen1 ~]# docker image ls
REPOSITORY                         TAG       IMAGE ID       CREATED        SIZE
quay.io/ceph/ceph                  v16       3c4eff6082ae   4 months ago   1.19GB
quay.io/ceph/ceph-grafana          8.3.5     dad864ee21e9   2 years ago    558MB
quay.io/prometheus/prometheus      v2.33.4   514e6a882f6e   2 years ago    204MB
quay.io/prometheus/node-exporter   v1.3.1    1dbe0e931976   2 years ago    20.9MB
quay.io/prometheus/alertmanager    v0.23.0   ba2b418f427c   3 years ago    57.5MB
[root@hydrogen1 ~]# docker container ls
CONTAINER ID   IMAGE                                     COMMAND                  CREATED          STATUS          PORTS   NAMES
84f3a77dab71   quay.io/ceph/ceph-grafana:8.3.5           "/bin/sh -c 'grafana…"   11 minutes ago   Up 11 minutes           ceph-fe840364-7bc6-11ef-98cd-e43d1a0a3210-grafana-hydrogen1
94d484af6019   quay.io/prometheus/alertmanager:v0.23.0   "/bin/alertmanager -…"   11 minutes ago   Up 11 minutes           ceph-fe840364-7bc6-11ef-98cd-e43d1a0a3210-alertmanager-hydrogen1
7ed97385015b   quay.io/prometheus/prometheus:v2.33.4     "/bin/prometheus --c…"   12 minutes ago   Up 11 minutes           ceph-fe840364-7bc6-11ef-98cd-e43d1a0a3210-prometheus-hydrogen1
98f5eb2a8a51   quay.io/prometheus/node-exporter:v1.3.1   "/bin/node_exporter …"   13 minutes ago   Up 13 minutes           ceph-fe840364-7bc6-11ef-98cd-e43d1a0a3210-node-exporter-hydrogen1
e5538bda843d   quay.io/ceph/ceph                         "/usr/bin/ceph-crash…"   14 minutes ago   Up 14 minutes           ceph-fe840364-7bc6-11ef-98cd-e43d1a0a3210-crash-hydrogen1
3e7f7281eafb   quay.io/ceph/ceph:v16                     "/usr/bin/ceph-mgr -…"   18 minutes ago   Up 18 minutes           ceph-fe840364-7bc6-11ef-98cd-e43d1a0a3210-mgr-hydrogen1-knyqls
2978568d1150   quay.io/ceph/ceph:v16                     "/usr/bin/ceph-mon -…"   18 minutes ago   Up 18 minutes           ceph-fe840364-7bc6-11ef-98cd-e43d1a0a3210-mon-hydrogen1

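The containers correspond to the following components:

1. ceph-mgr: the Ceph manager daemon

2. ceph-mon: the Ceph monitor daemon

3. ceph-crash: the crash-data collection module

4. prometheus: the Prometheus monitoring component

5. grafana: the dashboard component that visualizes Prometheus monitoring data

6. alertmanager: the Prometheus alerting component

7. node_exporter: the Prometheus per-node metrics collector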

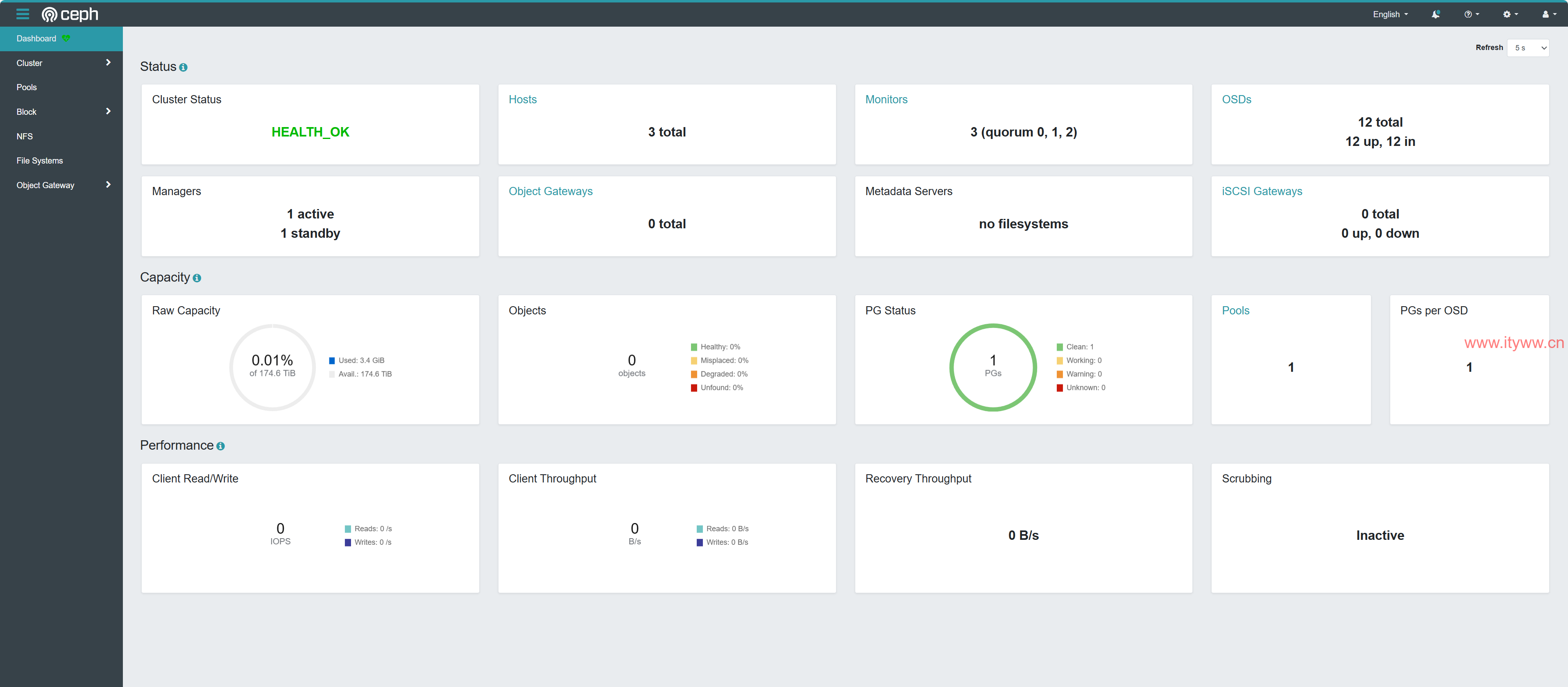

d) Open the Ceph Dashboard with the URL (https://hydrogen1:8443/) and the account details printed when the bootstrap finished.

e) Install the Ceph command-line tools: dnf install -y ceph-common

[root@hydrogen1 ~]# dnf install -y ceph-common
Last metadata expiration check: 2:31:59 ago on Thu 26 Sep 2024 11:38:15 AM CST.
Dependencies resolved.
================================================================================
 Package              Architecture   Version            Repository   Size
================================================================================
Installing:
 ceph-common          x86_64         2:16.2.15-0.el8    Ceph          24 M
Installing dependencies:
 gperftools-libs      x86_64         1:2.7-9.el8        epel         306 k
 ...(omitted)...
 userspace-rcu        x86_64         0.10.1-4.el8       baseos       100 k
Transaction Summary
================================================================================
Install  23 Packages
Total download size: 40 M
Installed size: 133 M
Downloading Packages:
(1/23): python3-prettytable-0.7.2-14.el8.noarch.rpm   213 kB/s |  43 kB   00:00
...(omitted)...
(23/23): ceph-common-16.2.15-0.el8.x86_64.rpm         7.6 MB/s |  24 MB   00:03
--------------------------------------------------------------------------------
Total                                                 8.8 MB/s |  40 MB   00:04
Running transaction check
Transaction check succeeded.
Running transaction test
Transaction test succeeded.
Running transaction
  Preparing   :                                  1/1
  Installing  : librdmacm-48.0-1.el8.x86_64      1/23
  ...(omitted)...
  Verifying   : libunwind-1.3.1-3.el8.x86_64     23/23
Installed:
  ceph-common-2:16.2.15-0.el8.x86_64
  gperftools-libs-1:2.7-9.el8.x86_64
  leveldb-1.22-1.el8.x86_64
  libcephfs2-2:16.2.15-0.el8.x86_64
  liboath-2.6.2-3.el8.x86_64
  librabbitmq-0.9.0-5.el8_9.x86_64
  librados2-2:16.2.15-0.el8.x86_64
  libradosstriper1-2:16.2.15-0.el8.x86_64
  librbd1-2:16.2.15-0.el8.x86_64
  librdkafka-1.6.1-1.el8.x86_64
  librdmacm-48.0-1.el8.x86_64
  librgw2-2:16.2.15-0.el8.x86_64
  libunwind-1.3.1-3.el8.x86_64
  lttng-ust-2.8.1-11.el8.x86_64
  python3-ceph-argparse-2:16.2.15-0.el8.x86_64
  python3-ceph-common-2:16.2.15-0.el8.x86_64
  python3-cephfs-2:16.2.15-0.el8.x86_64
  python3-prettytable-0.7.2-14.el8.noarch
  python3-pyyaml-3.12-12.el8.x86_64
  python3-rados-2:16.2.15-0.el8.x86_64
  python3-rbd-2:16.2.15-0.el8.x86_64
  python3-rgw-2:16.2.15-0.el8.x86_64
  userspace-rcu-0.10.1-4.el8.x86_64
Complete!

f) Add hosts to the cluster

f1) Install the cluster's public SSH key on the other two nodes:

[root@hydrogen1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@hydrogen2
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/etc/ceph/ceph.pub"
Number of key(s) added: 1
Now try logging into the machine, with: "ssh 'root@hydrogen2'"
and check to make sure that only the key(s) you wanted were added.
[root@hydrogen1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@hydrogen3
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/etc/ceph/ceph.pub"
Number of key(s) added: 1
Now try logging into the machine, with: "ssh 'root@hydrogen3'"
and check to make sure that only the key(s) you wanted were added.

f2) Add the other two nodes to the Ceph cluster

[root@hydrogen1 ~]# ceph orch host add hydrogen2 172.16.100.132 --labels _admin
Added host 'hydrogen2' with addr '172.16.100.132'
[root@hydrogen1 ~]# ceph orch host add hydrogen3 172.16.100.133 --labels _admin
Added host 'hydrogen3' with addr '172.16.100.133'

f3) List all hosts managed by Ceph to verify

[root@hydrogen1 ~]# ceph orch host ls    # an empty STATUS column means the host is managed normally
HOST       ADDR            LABELS  STATUS
hydrogen1  172.16.100.131  _admin
hydrogen2  172.16.100.132  _admin
hydrogen3  172.16.100.133  _admin
3 hosts in cluster
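
Steps f1 and f2 repeat the same two commands for each additional host. As a sketch, the helper below generates those commands from a list of "hostname IP" pairs instead of running anything itself, so the output can be reviewed first. The function name `print_host_add_cmds` is illustrative; the commands it prints are the ones shown above.

```shell
#!/bin/sh
# print_host_add_cmds
# Read "HOSTNAME IP" pairs on stdin and print, for each host, the key-copy
# and host-add commands from steps f1 and f2. Nothing is executed here.
print_host_add_cmds() {
    while read -r host ip; do
        [ -n "$host" ] || continue   # skip blank lines
        echo "ssh-copy-id -f -i /etc/ceph/ceph.pub root@$host"
        echo "ceph orch host add $host $ip --labels _admin"
    done
}

# Usage:
#   printf '%s\n' 'hydrogen2 172.16.100.132' 'hydrogen3 172.16.100.133' | print_host_add_cmds
```

Once the printed commands look right, piping the same output to `sh` on Ceph node 1 runs them in order.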

3. Add OSD storage (run every step below on Ceph node 1)

a) Run ceph orch device ls to identify the devices that will be configured as OSDs

[root@hydrogen1 ~]# ceph orch device ls
HOST       PATH      TYPE  DEVICE ID                 SIZE   AVAILABLE  REFRESHED  REJECT REASONS
hydrogen1  /dev/sda  hdd   WDC_WUH721816AL_2PHD3WLJ  14.5T  Yes        3s ago
hydrogen1  /dev/sdb  hdd   WDC_WUH721816AL_3MGB7Y6U  14.5T  Yes        3s ago
hydrogen1  /dev/sdc  hdd   WDC_WUH721816AL_2BJAZZYD  14.5T  Yes        3s ago
hydrogen1  /dev/sdd  hdd   WDC_WUH721816AL_2BJAWEHD  14.5T  Yes        3s ago
hydrogen2  /dev/sda  hdd   WDC_WUH721816AL_2PHBJA5J  14.5T  Yes        13m ago
hydrogen2  /dev/sdb  hdd   WDC_WUH721816AL_3FHXVBNT  14.5T  Yes        13m ago
hydrogen2  /dev/sdc  hdd   WDC_WUH721816AL_2PGZPNPT  14.5T  Yes        13m ago
hydrogen2  /dev/sdd  hdd   WDC_WUH721816AL_3FJWMS2T  14.5T  Yes        13m ago
hydrogen3  /dev/sda  hdd   WDC_WUH721816AL_2BJ9L55D  14.5T  Yes        6m ago
hydrogen3  /dev/sdb  hdd   WDC_WUH721816AL_2BJAUKYD  14.5T  Yes        6m ago
hydrogen3  /dev/sdc  hdd   WDC_WUH721816AL_2BJAUP5D  14.5T  Yes        6m ago
hydrogen3  /dev/sdd  hdd   WDC_WUH721816AL_2BJAUUHD  14.5T  Yes        6m ago

b) Run ceph orch daemon add osd Hostname:/dev/sdx for every disk on every node that is to be configured as an OSD

[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen1:/dev/sda
Created osd(s) 0 on host 'hydrogen1'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen1:/dev/sdb
Created osd(s) 1 on host 'hydrogen1'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen1:/dev/sdc
Created osd(s) 2 on host 'hydrogen1'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen1:/dev/sdd
Created osd(s) 3 on host 'hydrogen1'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen2:/dev/sda
Created osd(s) 4 on host 'hydrogen2'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen2:/dev/sdb
Created osd(s) 5 on host 'hydrogen2'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen2:/dev/sdc
Created osd(s) 6 on host 'hydrogen2'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen2:/dev/sdd
Created osd(s) 7 on host 'hydrogen2'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen3:/dev/sda
Created osd(s) 8 on host 'hydrogen3'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen3:/dev/sdb
Created osd(s) 9 on host 'hydrogen3'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen3:/dev/sdc
Created osd(s) 10 on host 'hydrogen3'
[root@hydrogen1 ~]# ceph orch daemon add osd hydrogen3:/dev/sdd
Created osd(s) 11 on host 'hydrogen3'
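
Typing twelve nearly identical commands is error-prone. The sketch below generates them from a host list and a device list; it assumes every host uses the same device names, as in this cluster. The function name `print_osd_add_cmds` is illustrative, and it only prints the commands.

```shell
#!/bin/sh
# print_osd_add_cmds "HOSTS" "DEVICES"
# Print one "ceph orch daemon add osd HOST:DEVICE" command per host/device
# pair. Both arguments are space-separated lists. Nothing is executed here.
print_osd_add_cmds() {
    for host in $1; do
        for dev in $2; do
            echo "ceph orch daemon add osd $host:$dev"
        done
    done
}

# Usage:
#   print_osd_add_cmds "hydrogen1 hydrogen2 hydrogen3" "/dev/sda /dev/sdb /dev/sdc /dev/sdd"
```

cephadm also provides `ceph orch apply osd --all-available-devices`, which consumes every disk reported as available. Listing devices explicitly, as this guide does, is the more conservative choice when some disks must stay out of the cluster.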

c) Check the OSD tree; every OSD reporting a STATUS of "up" means the disks were added successfully.

[root@hydrogen1 ~]# ceph osd tree
ID  CLASS  WEIGHT     TYPE NAME           STATUS  REWEIGHT  PRI-AFF
-1         174.63226  root default
-3          58.21075      host hydrogen1
 0    hdd   14.55269          osd.0           up   1.00000  1.00000
 1    hdd   14.55269          osd.1           up   1.00000  1.00000
 2    hdd   14.55269          osd.2           up   1.00000  1.00000
 3    hdd   14.55269          osd.3           up   1.00000  1.00000
-5          58.21075      host hydrogen2
 4    hdd   14.55269          osd.4           up   1.00000  1.00000
 5    hdd   14.55269          osd.5           up   1.00000  1.00000
 6    hdd   14.55269          osd.6           up   1.00000  1.00000
 7    hdd   14.55269          osd.7           up   1.00000  1.00000
-7          58.21075      host hydrogen3
 8    hdd   14.55269          osd.8           up   1.00000  1.00000
 9    hdd   14.55269          osd.9           up   1.00000  1.00000
10    hdd   14.55269          osd.10          up   1.00000  1.00000
11    hdd   14.55269          osd.11          up   1.00000  1.00000
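
With a dozen OSDs, scanning the STATUS column by eye is easy to get wrong. The following sketch counts the OSD rows that are not "up". It assumes the column layout shown above, where each OSD row reads ID, CLASS, WEIGHT, NAME, STATUS; an OSD without a device class would shift the columns and is not handled. The function name `count_osds_not_up` is illustrative.

```shell
#!/bin/sh
# count_osds_not_up
# Read "ceph osd tree" output on stdin and print the number of OSD rows
# whose STATUS (5th field) is anything other than "up".
count_osds_not_up() {
    awk '$4 ~ /^osd\.[0-9]+$/ && $5 != "up" { n++ } END { print n + 0 }'
}

# Usage: ceph osd tree | count_osds_not_up
```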

4. Verify the cluster health

[root@hydrogen1 ~]# ceph -s

cluster:

id: fe840364-7bc6-11ef-98cd-e43d1a0a3210

health: HEALTH_OK

services:

mon: 3 daemons, quorum hydrogen1,hydrogen2,hydrogen3 (age 2h)

mgr: hydrogen2.desouk(active, since 2h), standbys: hydrogen1.knyqls

osd: 12 osds: 12 up (since 8m), 12 in (since 8m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 3.4 GiB used, 175 TiB / 175 TiB avail

pgs: 1 active+clean
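
Right after OSDs are added the cluster can take a while to settle, so a single `ceph -s` may not yet show HEALTH_OK. A minimal polling sketch follows, built on `ceph health`, which prints the health status on one line. The function name `wait_for_health_ok` and the default attempt count and delay are assumptions for illustration.

```shell
#!/bin/sh
# wait_for_health_ok [ATTEMPTS] [DELAY_SECONDS]
# Poll "ceph health" until it reports HEALTH_OK or the attempts run out.
# Defaults (30 attempts, 10 seconds apart) are arbitrary illustrative values.
wait_for_health_ok() {
    attempts=${1:-30}
    delay=${2:-10}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # Treat a failing or missing ceph command as "no status yet".
        status=$(ceph health 2>/dev/null) || status=""
        case $status in
            HEALTH_OK*) echo "$status"; return 0 ;;
        esac
        i=$((i + 1))
        sleep "$delay"
    done
    echo "cluster not healthy after $attempts checks: ${status:-no response}" >&2
    return 1
}

# Usage: wait_for_health_ok 30 10 && echo "cluster is ready"
```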

IV. Extending Ceph-Common to the Other Nodes

1. Install the ceph-common command-line tools on the other nodes so that basic ceph commands also work locally there.

2. Copy the repository file from Ceph node 1 to the other nodes (run on Ceph node 1)

[root@hydrogen1 ~]# scp /etc/yum.repos.d/ceph.repo root@hydrogen2:/etc/yum.repos.d
ceph.repo                                     100%  513     1.0MB/s   00:00
[root@hydrogen1 ~]# scp /etc/yum.repos.d/ceph.repo root@hydrogen3:/etc/yum.repos.d
ceph.repo                                     100%  513     1.1MB/s   00:00

3. Install the ceph-common package (run on Ceph node 2 and Ceph node 3 respectively): dnf install -y ceph-common

[root@hydrogen3 ~]# ceph -v
-bash: ceph: command not found
[root@hydrogen3 ~]# dnf install -y ceph-common
Ceph x86_64                                    613 kB/s |  77 kB   00:00
Ceph noarch                                     81 kB/s |  15 kB   00:00
Ceph SRPMS                                      19 kB/s | 1.7 kB   00:00
Dependencies resolved.
================================================================================
 Package              Architecture   Version            Repository   Size
================================================================================
Installing:
 ceph-common          x86_64         2:16.2.15-0.el8    Ceph          24 M
Installing dependencies:
 gperftools-libs      x86_64         1:2.7-9.el8        epel         306 k
 ...(omitted)...
 userspace-rcu        x86_64         0.10.1-4.el8       baseos       100 k
Transaction Summary
================================================================================
Install  23 Packages
Total download size: 40 M
Installed size: 133 M
Downloading Packages:
(1/23): python3-prettytable-0.7.2-14.el8.noarch.rpm   580 kB/s |  43 kB   00:00
...(omitted)...
(23/23): liboath-2.6.2-3.el8.x86_64.rpm                18 kB/s |  59 kB   00:03
--------------------------------------------------------------------------------
Total                                                 6.2 MB/s |  40 MB   00:06
Ceph x86_64                                           3.8 kB/s | 1.1 kB   00:00
Importing GPG key 0x460F3994:
 Userid     : "Ceph.com (release key) <security@ceph.com>"
 Fingerprint: 08B7 3419 AC32 B4E9 66C1 A330 E84A C2C0 460F 3994
 From       : https://mirrors.aliyun.com/ceph/keys/release.gpg
Key imported successfully
Running transaction check
Transaction check succeeded.
Running transaction test
Transaction test succeeded.
Running transaction
  Preparing   :                                  1/1
  Installing  : librdmacm-48.0-1.el8.x86_64      1/23
  ...(omitted)...
  Verifying   : libunwind-1.3.1-3.el8.x86_64     23/23
Installed:
  ceph-common-2:16.2.15-0.el8.x86_64
  gperftools-libs-1:2.7-9.el8.x86_64
  leveldb-1.22-1.el8.x86_64
  libcephfs2-2:16.2.15-0.el8.x86_64
  liboath-2.6.2-3.el8.x86_64
  librabbitmq-0.9.0-5.el8_9.x86_64
  librados2-2:16.2.15-0.el8.x86_64
  libradosstriper1-2:16.2.15-0.el8.x86_64
  librbd1-2:16.2.15-0.el8.x86_64
  librdkafka-1.6.1-1.el8.x86_64
  librdmacm-48.0-1.el8.x86_64
  librgw2-2:16.2.15-0.el8.x86_64
  libunwind-1.3.1-3.el8.x86_64
  lttng-ust-2.8.1-11.el8.x86_64
  python3-ceph-argparse-2:16.2.15-0.el8.x86_64
  python3-ceph-common-2:16.2.15-0.el8.x86_64
  python3-cephfs-2:16.2.15-0.el8.x86_64
  python3-prettytable-0.7.2-14.el8.noarch
  python3-pyyaml-3.12-12.el8.x86_64
  python3-rados-2:16.2.15-0.el8.x86_64
  python3-rbd-2:16.2.15-0.el8.x86_64
  python3-rgw-2:16.2.15-0.el8.x86_64
  userspace-rcu-0.10.1-4.el8.x86_64
Complete!
[root@hydrogen3 ~]# ceph -v
ceph version 16.2.15 (618f440892089921c3e944a991122ddc44e60516) pacific (stable)
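
After installing ceph-common on every node, it is worth confirming that all of them report the same client version. The sketch below pulls the numeric version out of `ceph -v` output; it relies on the "ceph version X.Y.Z (...)" format seen in this guide, and the function name `ceph_version_number` is illustrative.

```shell
#!/bin/sh
# ceph_version_number
# Read "ceph -v" output on stdin and print only the numeric version.
# Prints nothing if the input does not start with "ceph version".
ceph_version_number() {
    awk '$1 == "ceph" && $2 == "version" { print $3; exit }'
}

# Usage (prints one "host version" line per node for comparison):
#   for h in hydrogen1 hydrogen2 hydrogen3; do
#       printf '%s ' "$h"; ssh "root@$h" ceph -v | ceph_version_number
#   done
```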